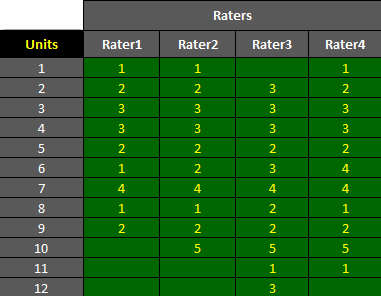

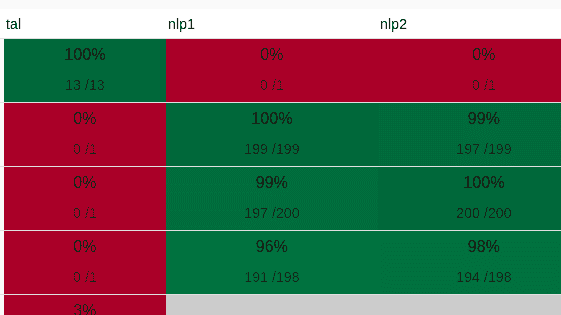

AgreeStat/360: computing weighted agreement coefficients (Conger's kappa, Fleiss' kappa, Gwet's AC1/AC2, Krippendorff's alpha, and more) for 3 raters or more

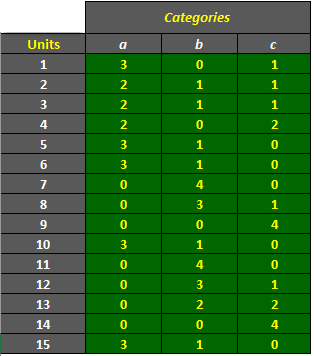

AgreeStat/360: computing weighted agreement coefficients (Fleiss' kappa, Gwet's AC1/AC2, Krippendorff's alpha, and more) with ratings in the form of a distribution of raters by subject and category

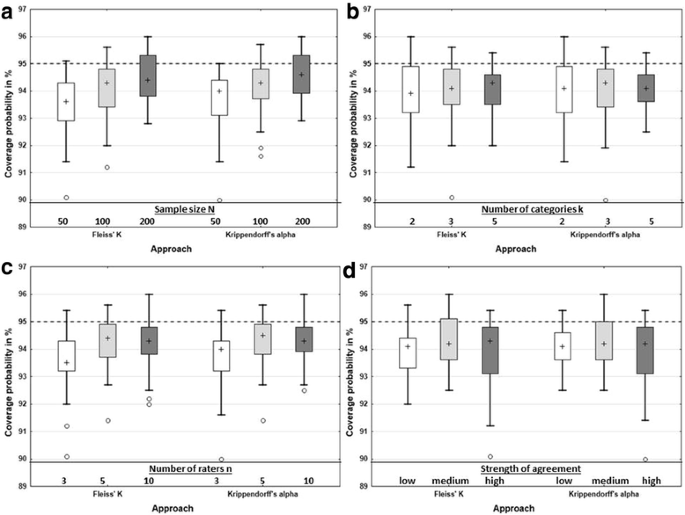

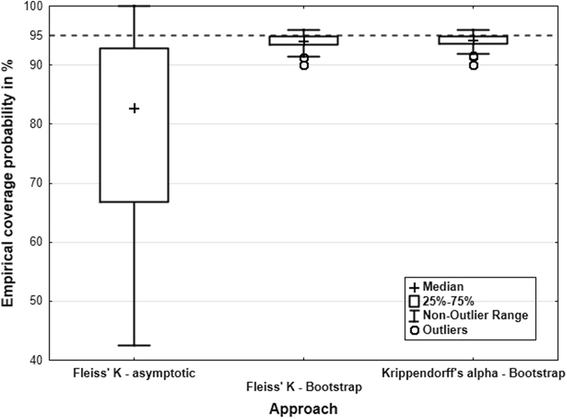

Measuring inter-rater reliability for nominal data – which coefficients and confidence intervals are appropriate? | BMC Medical Research Methodology | Full Text

Measuring inter-rater reliability for nominal data - which coefficients and confidence intervals are appropriate? - Abstract - Europe PMC

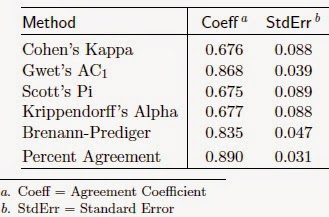

![PDF: On The Krippendorff's Alpha Coefficient | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/90b246032379c922503fa8cdcfce56435a142148/5-Table2-1.png)

![PDF: On The Krippendorff's Alpha Coefficient | Semantic Scholar](https://d3i71xaburhd42.cloudfront.net/90b246032379c922503fa8cdcfce56435a142148/12-Table4-1.png)